Why everyone is talking about Refik Anadol’s AI-generated ‘living paintings’

This year’s Frieze Week includes a slate of art openings at galleries around town — exhibitions apart from fair presentations. Hauser & Wirth is showing paintings by George Condo at its new West Hollywood space; Sprüth Magers is exhibiting paintings, sculptures, drawings, video and installation work by Anne Imhof; and Jeffrey Deitch is presenting digital artist Refik Anadol’s first major solo gallery exhibition in L.A., which opened Tuesday.

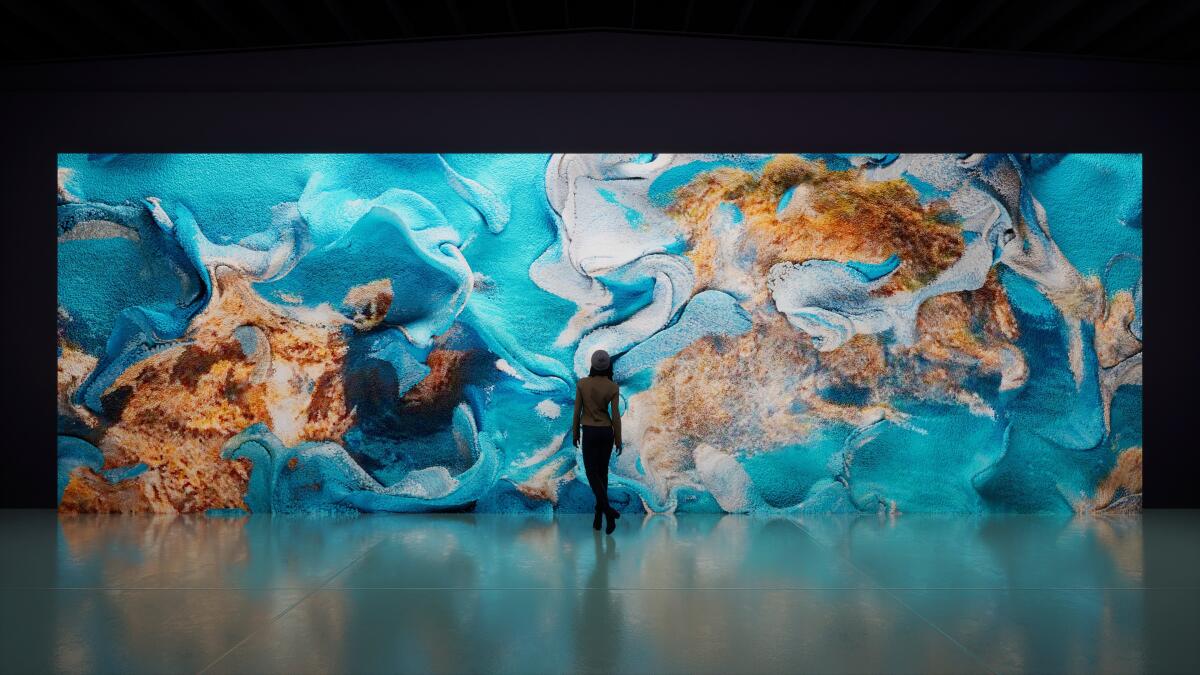

The show features Anadol’s hypnotic, AI-generated “living paintings,” which transform publicly available data and images into vibrant, abstract digital works swirling and whooshing within their frames. For a show that’s heavily tech-driven, the exhibition feels counterintuitively organic, collectively depicting AI reinterpretations of California’s natural environments. One triptych, “Pacific Ocean,” draws on high-frequency radar data images of ocean environments; “Winds of LA” is created from wind data collected from weather sensors; and two separate triptychs incorporate more than 153 million publicly sourced images of landscapes from California’s national parks.

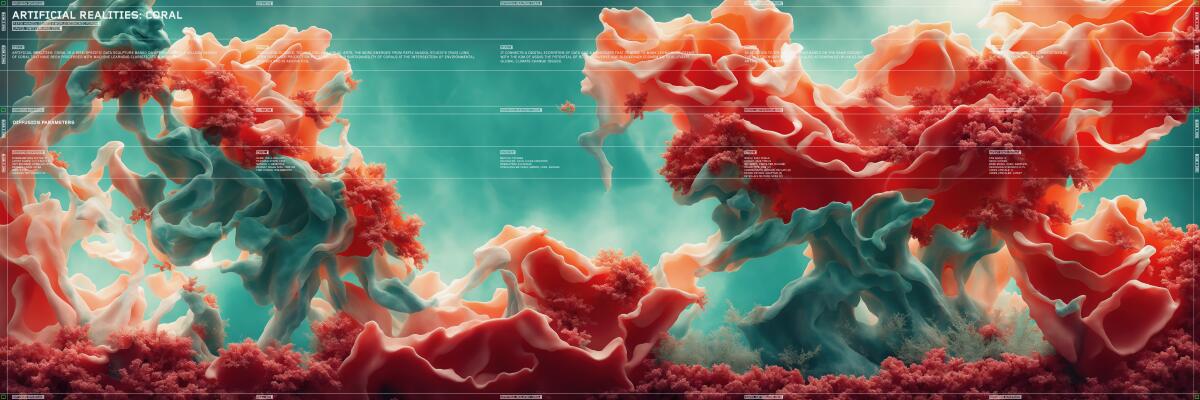

The signature piece, however, is where one can easily lose a chunk of the morning, even during an especially busy art week. Three of the aforementioned triptychs play on a loop in an immersive format on a 40-foot-wide LED wall along with a fourth work, “Coral Dreams,” which is not otherwise on view in the show. Excerpts from Anadol’s 2021 “Machine Hallucinations” series, including “Coral Dreams,” served as the backdrop for this year’s Grammy Awards stage. In the intimate gallery space, the work feels no less dramatic.

The screen for this central work, “Living Paintings Immersive Editions,” falls flush against the ground, so that the digital imagery spills onto the gallery’s polished concrete floor, engulfing viewers in flashes of color, spools of white light and dynamic, jewel-toned shadows. Wave-like forms appear to swell beyond the screen. Sound designer Kerim Karaoglu’s soundscape — an AI-human collaboration — fills the room. The work is at times calming and meditative, at times invigorating and unsettling. The body reacts, with the breath quickening or falling suddenly heavy and slow. As of Saturday, the work will include a garden-like scent that will waft throughout the room and is, Anadol says, AI-generated.

The Istanbul-born artist, who has lived in L.A. since 2012, is also a computer programmer. His 16-person team, based in Frogtown, spends months sourcing data, then applies an algorithm to scrub the imagery of any human traces (no faces, body parts or personal details, such as names) for privacy reasons. Curating the images can take up to six months. Then the AI mind goes to work, using deep neural networks, “algorithms that have the capacity for learning,” Anadol explains in this edited conversation. “But while making the work, I have a lot of control: fine-tuning the parameters, like speed, form, even the AI learning rate. My hope is not to mimic reality or create a realistic copy of nature but to come up with something that feels like dreaming.”

We spoke with Anadol on the heels of the opening of his solo show.

The theme of this exhibition seems to be making the ephemeral visible, whether that’s weather patterns conveyed in abstract patterns or human emotions cast in physical, high-density foam. Where does this urge come from?

It comes from a very childish imagination. It started when I started playing with computers, creating software, at 8 years old. I’ve always believed there’s another world around us that we cannot perceive but that exists. If you think about data, sensors and machines, we know that they communicate with each other through signals. And signals are not visible, but we know they exist. [I want] to demystify that reality.

Do you consider yourself the auteur of the work in “Living Paintings,” or is it a human-machine collaboration?

It’s the second, a human-machine collaboration. Because it’s truly using AI as a collaborator. It’s kind of like creating a thinking brush. It’s actually more work [than not using AI]. Even though AI doesn’t forget … to create the story and narrative, still, it’s a human intervention.

You talk about working with data — wind speed currents, precipitation and air pressure, even brain waves — as if they were “pigment,” the way a painter works with paint. Can you elaborate on that?

When I think about data as a pigment, I feel like it’s always changing, always shape-shifting, it doesn’t dry. It’s constantly in flux. So I feel like data become a pigment, and that’s what the feeling will be. That’s one of the reasons, in the exhibition, everything is alive, in contrast to being frozen. I think it’s really representing this world, this reality, that’s around us that always changes. Always creating new meaning. Change and control in art-making is becoming more relevant.

Many people are skeptical of AI art. Others worry that the technology will devalue artists’ livelihoods. How do you respond to that?

I completely hear and agree that this technology can create potential harm and problems. And I know there are artists that are concerned about this. I completely hear them and understand them. But I also believe that the same technology can bring a new dimension and enhance the human mind. I’m not a wishful thinker, and I can hear and see all the problems, but because of these reasons, I am, all the time, training our AI models.

What is art is what happens after [the AI plays a role]. Personally, I spend more time with the AI findings, the AI outputs, so I don’t just use what the AI does. I personally spend more time after the AI created things. And I’m pretty sure many artists, at the moment, are imagining what else they can do with these new tools. I believe it’s also saving time and enhancing creativity. That’s one of the reasons, in our show, the largest artwork is actually a process wall, demystifying the AI decisions, showing the algorithms and so on. I spent so much time to demystify the AI.

You worked with the Neuroscape laboratory at UC San Francisco to create your “Neural Paintings,” which capture actual human memories that machine-learning algorithms then visualized. It’s vulnerable work but also particularly personal. Can you share what sparked it?

Unfortunately, in 2016, my uncle died of Alzheimer’s disease. That’s when I started thinking about, how can I preserve, without breaching privacy, the memories? The more childish question is: Can we touch our memories? Are they physical?

It’s [about] the similarities of our minds, it represents similar patterns. Even though memories are personal and unique for us, still there’s a pattern that we all create together in a similar way, and these three portraits are representing our similarities.

What do you think of LACMA’s recent announcement of a gift it describes as “the first and largest collection of artworks minted on blockchain to enter an American art museum”?

I think it’s amazing. I’m so happy that finally museums are recognizing the movement — it’s a bright signal for the future of the field. It means that museums are trusting the medium; it means that blockchain technology is validating itself; it means that digital art is [being] recognized.

What was it like to create the backdrop of the Grammys stage with your art — and what’s your take on Beyoncé losing out to Harry Styles for album of the year?

I am deeply, deeply honored for such important recognition. Six months ago, I got the call from the executive producers, and they said it was the very first time they were using AI and the very first time they were collaborating with a visual artist on this level, so that was very exciting. I was so happy to see the piece on such a major scale. But I barely follow the details [of music]. I mostly listen to AI music or classical music. But they’re both giants.

You have plans to open an AI museum, Dataland, in downtown L.A. next year — what is that about?

That’s the next dream, the big dream. For five years I have been dreaming about this. I’ve always struggled to find institutions or spaces that fit [my] dreams. I thought perhaps it’s a great time to find a way to reinvent this new type of experience. I’ve seen lots of immersive experiences, but I’m trying to do something completely different — and with AI and data and new ways of imagining the future. It will be a major location — in three or four weeks we’re locking down the details.

But I can say it’s a cultural destination, and it will make a major impact. It [will feature] many collaborations, between many people across the world and across disciplines — researchers, artists, musicians. Immersive environments, simulations, multisensory. We’ll be exploring sound, image, text and the cutting edge of generative AI. My hope is to create inspiration, hope and joy.

"Refik Anadol: Living Paintings"

Where: Jeffrey Deitch, 925 North Orange Drive, Hollywood

When: Tues.-Sat., 11 a.m.-6 p.m., through April 29.

Cost: Free

Info.: deitch.com
