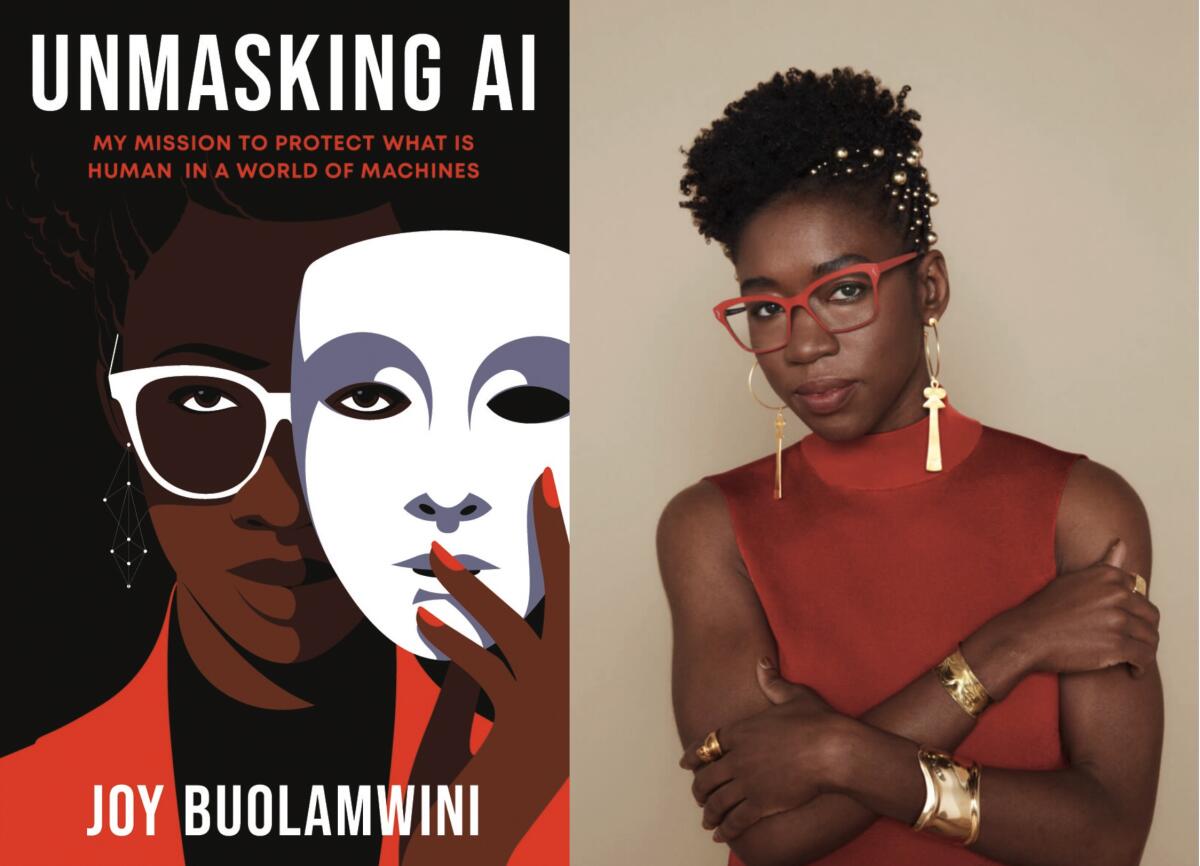

Column: She set out to build robots. She ended up exposing big tech

Joy Buolamwini, author of “Unmasking AI: My Mission to Protect What Is Human in a World of Machines,” joins the L.A. Times Book Club on Nov. 14.

When Joy Buolamwini set off for college, her ambitions, she thought, were pretty straightforward. She wanted to pursue robotics and build cool tech.

The child of first-generation Ghanaian immigrants — her mother was an artist, her father a medical chemist focused on drug discovery — she got hooked on computers as soon as her dad used them to share his work with her. A science show on robots inspired Buolamwini to set her sights on MIT, and before long, she’d learned to code. After high school, she made her way to Georgia Tech.

But Buolamwini soon found herself confronting what she’d later come to term “the coded gaze” — technological systems that did not function for her, or recognize her as equal to her white peers, because of the color of her skin.

The bias and racism she encountered as a young, Black, female student of computer science were not necessarily the result of targeted malice. They were, perhaps, something even worse: a sort of prejudice deeply encoded in the very systems that most people considered to be impartial, even incapable of bias. Systems that increasingly determine the rules for how people live and work, whether we get hired for a job, get into college, are able to access crucial medical care or even are accused of a crime.

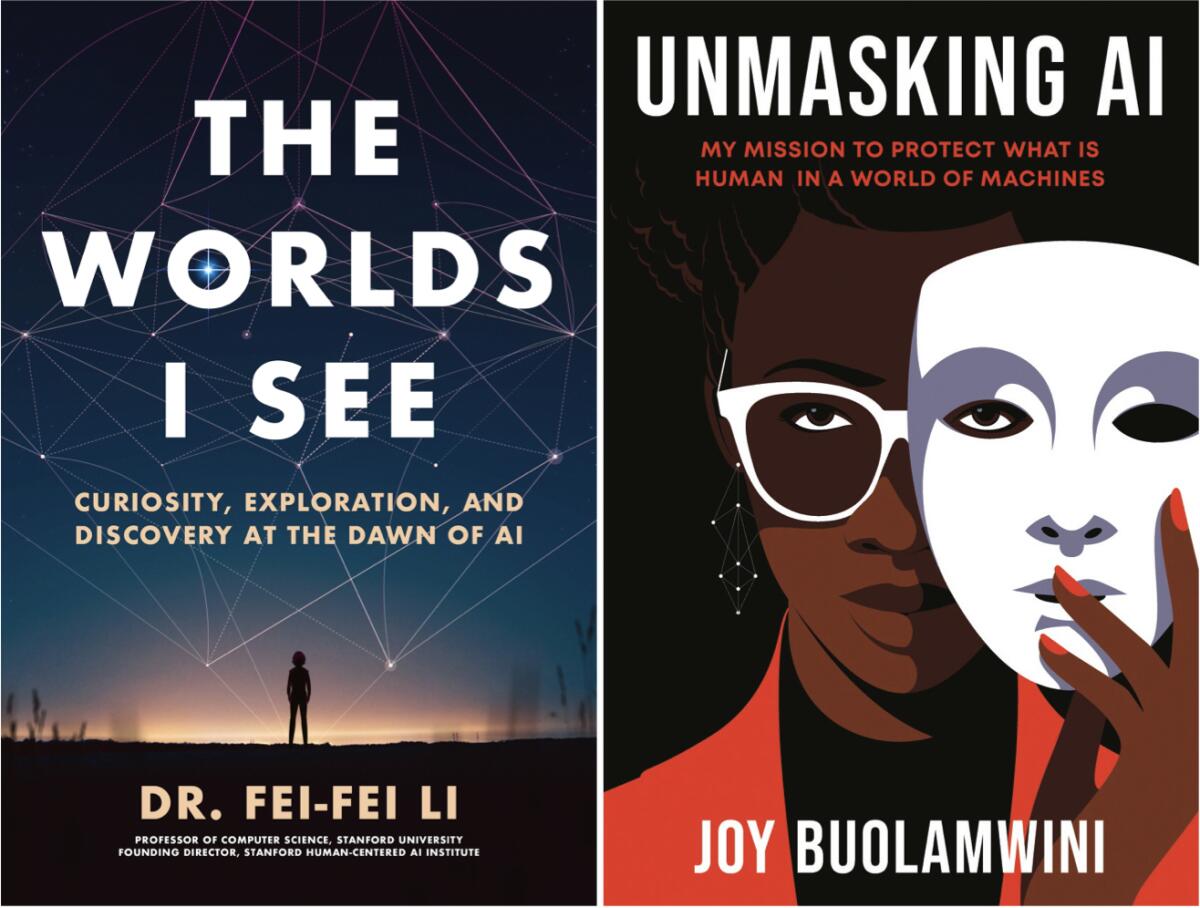

Buolamwini, now a world-renowned scholar of tech harms and biases and founder of the Algorithmic Justice League, has just published her first book, “Unmasking AI: My Mission to Protect What Is Human in a World of Machines.” On Nov. 14 she joins the L.A. Times Book Club to discuss “Unmasking AI” as part of a livestreamed conversation about the growth of AI and its impact on our humanity.

Buolamwini’s book recounts her journey to become one of the nation’s preeminent scholars and critics of artificial intelligence — she recently advised President Biden before the release of his executive order on AI — and offers readers a compelling, digestible guide to some of the most pressing issues in the field.

The cover of “Unmasking AI” is a nod to one of Buolamwini’s most lauded interventions, in which she powerfully demonstrated how computer vision programs failed to detect the presence of her face — but succeeded when she placed a white mask over it. Yet her first run-in with the coded gaze came years before, as an undergrad at Georgia Tech, in the early 2010s, as she was working on an assignment she called Project Peekaboo.

“I had a robot, Simon the robot, and the idea was to see if I could have it play a turn-taking game with me,” Buolamwini tells me in an interview. “The hope was, maybe in the future, you could have robotic teddy bears that interact with children. And depending on those interactions, you might be able to detect early developmental disorders and have earlier intervention.”

The problem was, she couldn’t get the computer vision technology to recognize her face. “So as an undergrad, I’m thinking, ‘OK, these technologies are still in the lab. So yes, sometimes it’s going to work, sometimes it’s not going to work.’” She borrowed her roommate, who was white, and used her to complete the assignment. “And just like that, it worked.”

She brushed off the incident, but before long, she ran into the dilemma again. Buolamwini was in Hong Kong on a team representing Georgia Tech at an international entrepreneurship competition, where she was given a tour of a science park. There, she encountered another robot using facial detection. “And I ran into the same issue I’d run into in my dorm room in Atlanta, at Georgia Tech, miles and miles away,” she says. “I started a talk shop with the team. And I found that we were using the same coding libraries.”

Still, she thought, it was early days for the technology, little cause for alarm, and again looked past the incident and toward the future.

Then, a few years later, Buolamwini finally landed at her dream post, as a scholar in MIT’s Media Lab, and ran into a variation of the same problem again.

Buolamwini was working on a project she was calling the Aspire Mirror. The idea was to use face-tracking software to register movements of the user’s face and translate them into movements of another, digitally processed aspirational face — an image of Serena Williams, in Buolamwini’s early experimentation — and then project them onto a piece of half-silvered glass. The result would look like another being’s face was staring back at you, moving as you moved.

Except, yet again, the facial recognition system would not consistently register Buolamwini’s face. It did, she realized, work when she put a white mask over her face.

“That experience of putting on something less than human to be seen as human by a machine is what sparked my curiosity,” Buolamwini says, “and led to the term the coded gaze — but also led to research looking at bias in not just computer vision systems, machine learning systems, but AI more broadly.”

It turned out that facial recognition systems like those she’d been encountering her entire academic life had been developed and trained primarily on faces that looked a lot like the people who had done the training and developing — that is, white and male, like the majority of the American tech industry. The flaws could no longer be blamed on the tech’s newness; companies like Facebook were releasing papers arguing that their facial verification technology was over 97% accurate. The problem was who the systems had been designed for, and who they had been tested on.

It was a clear example of what would soon come to be known as algorithmic bias — systems that prefer one group over another because they’ve been designed with or trained on biased data — and the genesis of Buolamwini’s coinage of “the coded gaze.”

“The coded gaze borrows from the term male gaze and white gaze,” Buolamwini tells me. “All of these terms truly get at power — who has the power to shape what the priorities are, what the preferences are, and how these prejudices get baked into our system.”

Until then, Buolamwini had still been unsure whether she wanted to make critical work her focus as a scholar; she still harbored that inner roboticist who just wanted to build great new stuff. But the problem had become undeniable, adoption of facial recognition software was on the rise, and Donald Trump had just become president, which to many was a grim portent of the potential abuses of such power.

“That led me to say, ‘OK, let me really focus in on this curiosity I have.’ Because as a scientist, I can’t just say, ‘This tech is biased. It’s racist.’ I had enough sample data points, just from my own experience, trying out things, but I now wanted to do a rigorous evaluation.”

Investigating and combating algorithmic bias, and the host of harms caused by AI systems, became Buolamwini’s focus, and she became a pioneering scholar and activist in the field. She founded the Algorithmic Justice League, delivered TED Talks and co-authored a landmark paper, “Gender Shades,” with the great AI researcher Timnit Gebru. It revealed that commercial facial analysis systems from Microsoft, IBM and Face++ failed to correctly classify the gender of women, and especially women of color, far more frequently than they did white men.

Now 33 and living in the Boston area, Buolamwini is the subject of an award-winning PBS documentary, “Coded Bias,” that brought the above ideas to an ever-wider audience. She advises the United Nations. She flies to Davos. She offered advice to President Biden in the run-up to the executive order on AI safeguards that he announced Oct. 30. And she’s expanded her focus from biases to the harms caused by systems more broadly.

So what’s changed since she set out to address the coded gaze? Is she seeing more awareness of the issue among the public and in the halls of power?

“This is part of why I wrote the book,” Buolamwini says. “Yes, in policy circles, and in tech circles, there is increased awareness, not just about AI bias, which the Gender Shades paper shows, but also AI harm, because you could have accurate systems that can be abused. Think of a drone, a gun, a camera, facial recognition.”

All are systems increasingly imbued with AI and automation — systems that can cause great harm even, or especially, if they’re working as intended. “And so it’s really about starting to not just think about bias, which is still prevalent, but also thinking about the harms of systems.”

Yet most Americans, she says, are still in the dark about many of these issues. And the harms of such systems are harder to make obvious, at least in memorable presentations that stick in the gut. “When you have AI systems that determine if you get hired or fired, if you get admitted into college, or informs the decisions if you have access to medical treatment,” she says, “those types of systems are much harder to see.”

As Buolamwini well knows, in the white-hot world of AI, there’s plenty of unmasking yet to be done.

Book Club AI Night

What: Fei-Fei Li, author of “The Worlds I See,” and Joy Buolamwini, author of “Unmasking AI,” will be in conversation with Times audio head Jazmín Aguilera. Times technology columnist Brian Merchant, author of “Blood in the Machine,” joins the discussion.

When: Nov. 14 at 6 p.m. Pacific

Where: This free virtual event will livestream on YouTube, Facebook and X (formerly Twitter). Sign up on Eventbrite for direct links and signed books.

Join: Sign up for the book club newsletter for the latest events and book news at latimes.com/bookclub.
