Driver in Tesla crash relied excessively on Autopilot, but Tesla shares some blame, federal panel finds

A Tesla car in Autopilot mode warned its driver seven times to put his hands on the steering wheel during the 40 minutes before the crash that ended his life last year, NTSB reports say. (Sept. 12, 2017)

A fatal 2016 crash involving a Tesla sedan was caused by the driver’s over-reliance on his vehicle’s Autopilot system and by a truck driver’s failure to yield while entering a Florida roadway, a federal panel determined Tuesday.

But the National Transportation Safety Board also laid some blame on Tesla Inc. in the long-awaited findings of an investigation into the first-known fatal accident involving semiautonomous driving technology.

The board said Tesla’s Autopilot contributed to the crash. The software of the Tesla Model S “permitted [the driver’s] prolonged disengagement from the driving task” and let him use the Autopilot system on the wrong type of road.

The NTSB also said technology that senses a driver’s hands on the wheel, such as that used by Tesla, is not an effective way to tell whether the driver is paying attention.

“In this crash, Tesla’s system worked as designed, but it was designed to perform limited tasks in a limited range of environments,” the board’s chairman, Robert L. Sumwalt, said after the board voted 4 to 0 on the probable cause of the crash and staff recommendations for avoiding future crashes.

“Tesla allowed the driver to use the system outside of the environment for which it was designed ... and the system gave far more leeway to the driver to divert his attention to something other than driving,” he said. “The result was a collision that, frankly, should have never happened.”

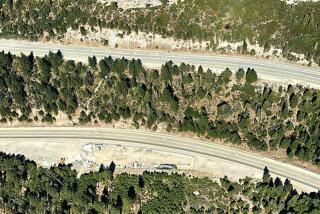

Joshua Brown, 40, died when the Tesla Model S sedan he was driving smashed into the trailer of a big-rig truck that was making a left turn in front of it from a cross street. Brown was traveling 74 mph using the Tesla’s semiautonomous Autopilot feature, which did not identify the truck and stop the vehicle.

Tesla, which is based in Palo Alto and led by Elon Musk, said that “the safety of our customers comes first” and that its Autopilot technology “significantly increases safety” and reduces crashes.

The company cited a January report by the National Highway Traffic Safety Administration that found a 40% drop in crashes after an automatic steering feature was included in Tesla cars. That report also concluded Tesla’s Autopilot had no safety defects and no recall was needed.

“We appreciate the NTSB’s analysis of last year’s tragic accident and we will evaluate their recommendations as we continue to evolve our technology,” the company said in a written statement. “We will also continue to be extremely clear with current and potential customers that Autopilot is not a fully self-driving technology and drivers need to remain attentive at all times.”

The NTSB’s staff extensively studied the crash and issued its findings as the automotive industry accelerates its development of self-driving vehicles.

The Trump administration said Tuesday that it was loosening rules on the development of driverless cars. The announcement came less than a week after the House passed legislation that would exempt automakers from some safety standards and permit each of them to put as many as 100,000 self-driving vehicles a year on U.S. roads.

The NTSB recommended that makers of semiautonomous vehicles develop a way to prevent the use of Autopilot-style technology when their vehicles are on roads that are not appropriate for the technology.

“A world of perfect self-driving cars would eliminate tens of thousands of deaths and millions of injuries each year,” Sumwalt said. “It is a long route from being partially automated vehicles to self-driving cars. Until we get there, somebody still has to drive.”

The staff said the Autopilot system functioned as designed during the May 2016 crash in Williston, Fla. But the technology was not meant to be used on the type of road on which the crash occurred. The Tesla owner’s manual says Autopilot should be used only on highways or limited-access roads that have on-ramps and off-ramps.

The Florida crash took place on a state road that had access from cross streets. The NTSB staffers said they thought Brown was very knowledgeable about the vehicle, but that the owner’s manual could be confusing.

“A driver could have difficulties interpreting exactly [on] which roads it might be appropriate” to use Autopilot, said Ensar Becic, an NTSB human performance investigator.

The staff recommended that Tesla and makers of other semiautonomous vehicles use satellite data to determine the type of road their vehicles are on and that they allow Autopilot-style technology to be used only where appropriate.

The Tesla software did not detect the truck crossing in front of Brown’s vehicle. NTSB staff dismissed the idea — floated last year by Tesla — that the car’s sensors were unable to detect the white truck because it was against a bright sky.

Tests by the National Highway Traffic Safety Administration determined that Tesla and other vehicles with semiautonomous driving technology had great difficulty sensing cross traffic.

“The systems don’t detect that type of object moving across their path with any reliability,” said Robert Molloy, director of the NTSB’s Office of Highway Safety.

The staff is recommending the use of vehicle-to-vehicle technology — in effect, vehicles talking to each other — to sense cross traffic.

The NTSB staff also said that Tesla’s reliance on sensing a driver’s hands on the wheel was not an effective way of monitoring whether the driver was paying attention.

Tesla has repeatedly called Autopilot an “assist feature.” It has said that while using Autopilot, drivers must keep their hands on the wheel at all times and be prepared to take over if necessary.

“Since driving is a largely visual task and the driver may interact with the steering wheel without assessing the environment, monitoring steering wheel torque is a poor surrogate for monitoring driving engagement,” Becic said.

The NTSB staff recommended the use of a more effective technology to determine whether a driver is paying attention, such as a camera tracking the driver’s eyes. A possible example — not mentioned by the staff — is Cadillac’s Super Cruise steering system, which includes a tiny camera that tracks eye and head movement to make sure the driver is paying attention to the road.

The panel’s review found that Brown’s last interaction with the vehicle as he drove was 1 minute and 51 seconds before the crash, when he set the cruise control function at 74 mph.

There was no indication the driver of the Tesla or the driver of the truck took evasive action before the crash, the NTSB staff said.

The driver of the truck, Frank Baressi, 63, refused to be interviewed by police after the crash, the NTSB said.

A blood test 90 minutes after the crash indicated he had used marijuana, but the NTSB determined that “his level of impairment, if any, at the time of the crash could not be determined from the available evidence.”

Before Tuesday’s meeting, Brown’s family said in a statement that he loved technology and that “zero tolerance for deaths would totally stop innovation and improvements.”

“Joshua loved his Tesla Model S. He studied and tested that car as a passion,” the family said in the statement issued Monday by Cleveland law firm Landskroner Grieco Merriman.

“We heard numerous times that the car killed our son. That is simply not the case,” the statement said.

Twitter: @JimPuzzanghera


UPDATES:

4:10 p.m.: This article was updated with additional background about self-driving vehicles.

11 a.m.: This article was updated with details about an earlier investigation by the National Highway Traffic Safety Administration and information about the driver of the truck involved in the crash.

10:05 a.m.: This article was updated with comment from Tesla.

9:35 a.m.: This article was updated with the NTSB’s conclusions.

8:55 a.m.: This article was updated with additional details from the hearing, including discussion of “appropriate” roads.

This article was originally published at 8:05 a.m.
